Two thousand years ago a library was buried in heat and ash. Shelves collapsed. Scrolls became charcoal. Generations later, the same pages are whispering again. The breakthrough did not come from scalpels or glue. It arrived through X-rays, code, and a determined global effort to find letters where the human eye sees none. When people say “AI has deciphered a 2,000-year-old scroll burned in the Vesuvius eruption,” they mean this: algorithms trained on carbon ink are now mapping invisible characters inside sealed papyrus rolls, and scholars are beginning to read actual passages instead of guessing at shadows.

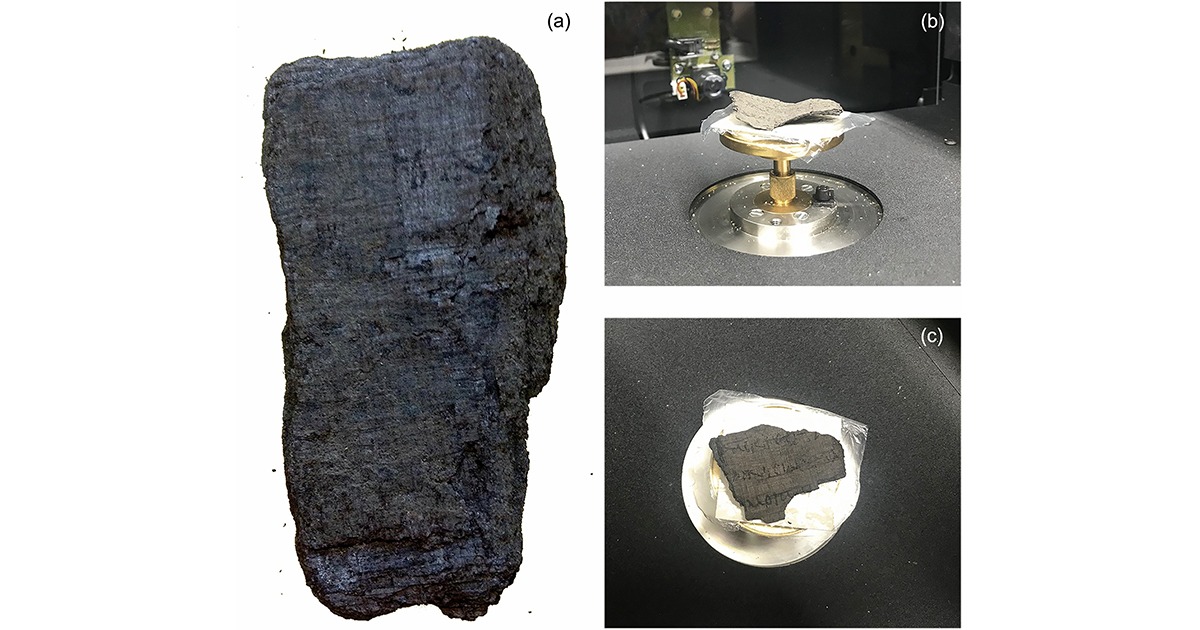

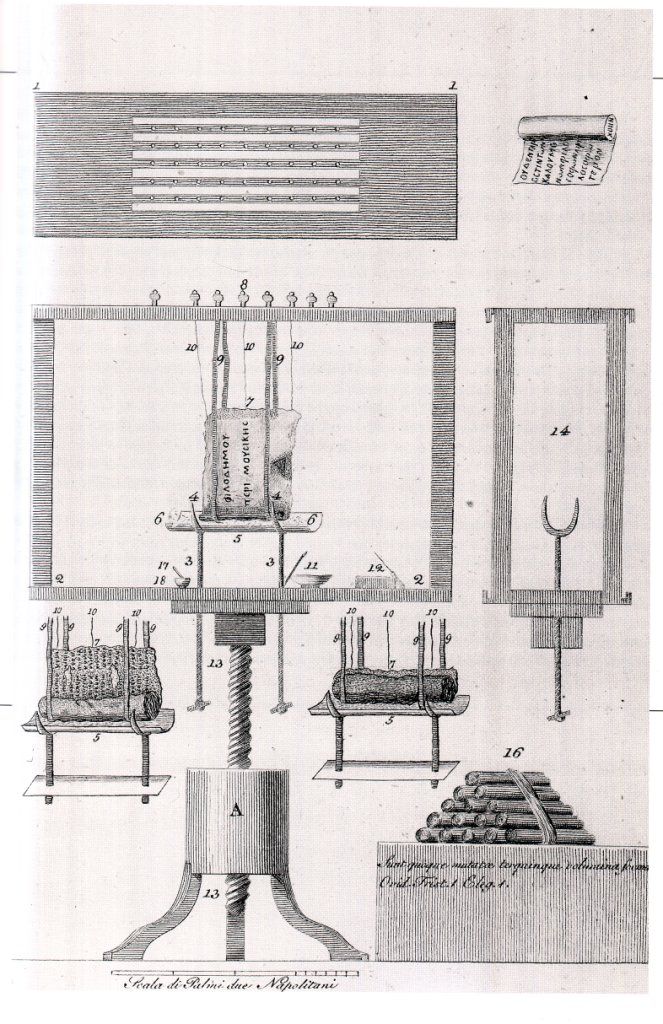

It sounds like a fable. It is also a careful sequence of scans, models, and checks. First, a micro-CT machine records the internal layers of a scroll without touching it. Next, a pipeline called virtual unwrapping models those layers as surfaces. Then, machine learning looks for the subtle texture change that ink leaves in the X-ray volume. Finally, papyrologists confirm the results, letter by letter, against the habits of Greek handwriting and known vocabulary. Each step matters. Together, they turn a charred log back into a book.

What was found, and why it matters now

The Herculaneum papyri make up the only intact library to survive from the ancient world. The collection sits at the Villa of the Papyri near modern Ercolano, a place hit by intense heat when Vesuvius erupted in 79 CE. For centuries the rolls were too fragile to open. Some were destroyed by early attempts to slice and peel. Others broke into flakes. A few lines were saved, yet the core remained silent. That silence has begun to lift. Recent competitions and collaborations have revealed entire columns of Greek text from inside sealed scrolls, including discussions tied to the Epicurean philosopher Philodemus. The texts are not mere curiosities. They comment on pleasure, perception, music, and taste. They show a literary voice mid-argument, not a museum label frozen in amber.

Crucially, these readings are not one-off miracles. New scans, new models, and new training sets continue to push the percentage of readable text upward. That scale changes how historians plan. Instead of hoping for a line or two, teams prepare to confront chapters. With that, the tone of the field shifts from rescue to research. What once felt like salvage now looks like the start of a new workflow for long-buried writing.

How the reading actually works

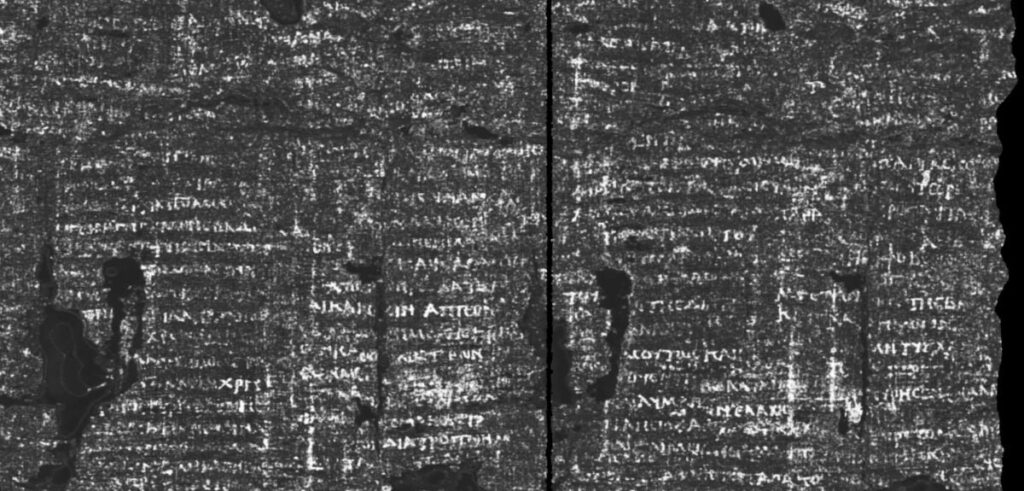

Here is the practical chain. A scroll is imaged at very high resolution using micro-CT or phase-contrast CT. The resulting volume shows layers folded, buckled, and fused. Software identifies surfaces, unwraps them virtually, and lays them flat without tearing a single fibre. On those flattened patches, machine-learning models scan for the signature of carbon ink. That signal is faint. Ink and papyrus are both carbon-rich. Yet they behave differently in X-rays and in the geometry of the fibres. Algorithms trained on labelled fragments learn the difference and mark the likely strokes. Researchers then assemble patches into columns and words. Papyrologists step in to judge where ink is genuine, where artefact, and how letters form syllables. The cycle repeats until a page emerges.

This is not guesswork. It is tested against fragments where the text is already visible. If a model can find the same letters in an X-ray volume that a camera sees on the surface, confidence grows. When three different models point to the same word in the same place, confidence grows further. And when multiple labs can reproduce the result, the reading moves from excitement to evidence.

Why AI was needed

The ink on these rolls is largely carbon. Standard X-ray methods separate materials by how they absorb energy. Carbon on carbon looks like shadow on shadow. The trick was to stop looking for darkness and start looking for texture. Ink lies on top of fibres and subtly changes the surface. In the volume, that leaves a tell-tale pattern machine learning can pick up once it has seen enough examples. In other words, computers learn to see what we cannot. People still make the call, but AI does the first pass at scale and speed.

There is also a social reason. Opening the data brought thousands of minds to the same puzzle. Prize challenges motivated coders, students, and researchers to try segmentation tools, transformer models, and novel loss functions on the same scans. Papyrologists and computer scientists found a common language: does this patch look like ink; can you show it again with a different model; how do we avoid hallucination. The outcome is more robust than a single lab working alone.

From first words to full passages

Early success arrived as a single Greek word. Soon after, longer phrases appeared. By last year, teams had released images showing columns dense with letters from inside an unopened scroll. Those lines point to a treatise that weighs everyday pleasures—food, fragrance, music—and the senses they stir. Not all words are clear. Not all sentences are complete. But enough of the argument stands to anchor commentary and translation. For the first time, the inner voice of a sealed Herculaneum roll speaks in something like full paragraphs.

That change in scale matters. A stray term might excite headlines; a passage changes scholarship. Passages let scholars cross-reference citations, track terms, and match style with known authors. They also give translators context, which reduces guesswork. A full column stabilises meaning in a way a fragment never can.

What the scans reveal about the scrolls themselves

The volumes show more than letters. They reveal how papyrus sheets were rolled, glued, and repaired. They capture folds, tears, and seams. They map voids where air pockets preserved a curve. They even show how heat changed fibre patterns. That structural information helps restorers, informs conservation, and guides algorithm design. If the model knows a patch lies on a sharply curved fold, it can compensate for distortion before testing for ink.

The scans also highlight the scale of the work ahead. Many rolls are bigger than they look, with dozens of layers packed into a single visible ridge. What looks like one page may be ten. Virtual tools make those pages accessible without a single cut, yet they still require time, compute, and verification. Reading a library remains a marathon, not a sprint.

Where the imaging happens

Several facilities support the effort. University labs handle micro-CT scans and controlled experiments on known fragments. National light sources contribute phase-contrast CT and high-energy imaging. Each instrument adds a piece to the puzzle—resolution here, contrast there, throughput elsewhere. Together they provide the slices, blocks, and beams that virtual unwrapping needs. As the workflow improves, scans become faster and models more accurate. The practical goal is simple: move from a few columns to whole scrolls, then from a handful of scrolls to a shelf.

Progress often depends on patient engineering. Better sample mounts reduce motion. Smarter reconstruction reduces noise. Improved segmentation follows fibre paths more faithfully. These incremental gains look small on a lab note; on a 50-centimetre roll they add up to a readable chapter.

Checks, balances, and avoiding wishful readings

Because the ink signal is subtle, the community has built guardrails. Teams publish model architectures and validation strategies. Multiple pipelines verify the same patch. Independent reviewers examine whether strokes align with papyrus fibres, whether letter shapes match the script style, and whether vocabulary fits context. When claims survive this scrutiny, confidence grows. When they do not, the images go back in the queue for rework.

That discipline pays off. It keeps excitement honest and prevents a flood of weak “finds” that would erode trust. It also protects the fragile relationship between computer vision and classical philology. Each must respect the other’s strengths. The result is a shared standard: show the patch; explain the model; justify the reading.

Beyond one scroll: a roadmap for a buried library

Reading one roll is proof of concept. Reading a shelf is rescue. The roadmap includes higher-throughput scanning, better layer tracking, semi-automated stitching of segments, and language models tuned to ancient Greek that can suggest but not overrule human readers. The dream extends further. If excavations one day recover deeper rooms at the Villa of the Papyri, a second library may emerge. Should that happen, the tools now maturing will be ready.

In the meantime, the current batch of scrolls is more than enough to occupy teams for years. Each new patch calibrates the next. Each column opens a path for commentary. Each translation anchors a footnote that once seemed fanciful. It is slow, patient work—the kind that leaves a field changed when you look up a decade later.

Common questions, answered simply

Is this “AI reading the past” on its own?

No. Models detect likely ink. People read the letters, test interpretations, and argue about syntax, as they should. The partnership works because each side does what it does best.

Are the images edited?

The virtual pages are reconstructions from the scan. Pipelines document each step, from segmentation to flattening to ink detection. Reviewers demand that the same result appear across different models and runs before accepting it as text.

What about errors?

Mistakes happen. Artefacts can mimic strokes. That is why teams cross-validate and publish methods. If a reading fails replication, it is revised or withdrawn. The process is designed to learn in public.

What this changes for classics and history

First, it increases supply. More texts mean broader arguments and fewer gaps in chains of citation. Second, it rescues voices outside the standard canon. Epicurean works dominate the known rolls, but even within that school we may find authors and genres that rarely survive elsewhere. Third, it refreshes method. Philologists now learn to read volumes and patches, not just photographs. Computer scientists learn to think in accents and scribal habits. That cross-training will outlast this project.

Finally, it repositions hope. For years, people spoke about the library as a lost treasure. Now they speak about it as a working archive. The difference is subtle and powerful. A treasure is admired. An archive is read.

Where this leaves the rest of us

If you care about the ancient world, this is good news. If you care about what AI is for, this is a model. It shows technology serving a clear human aim: understanding words left by people who thought hard about how to live. The story also shows how open data and public competitions can accelerate careful research without sacrificing rigour. Headlines come and go. The text on a page does not. Once a line is secure, it will be read for as long as people read Greek.

The old nightmare was that the great books were gone. The new reality is that some of them are back, line by line, with enough clarity to teach, provoke, and delight. That is worth a sober celebration—and a fresh budget line for scanners and servers.